Drones over Poland, online disinformation and... LLM Grooming

On the night of 9–10 September, Russian drones violated our airspace and the internet went wild. In moments like these, when information chaos mixes with anxiety, many of us look for quick and reliable answers. Where? Increasingly often in an AI chat window, which appears to be an oasis of organised knowledge.

We do not just ask ‘What is happening?’; we immediately look for someone to blame, often repeating theories heard online: ‘Are these really Russian drones? Or is it Ukraine attacking us to draw NATO into the war?’ We expect quick answers, and we get... well, what exactly?

Uncritical trust in AI-generated responses is a sure-fire way to fall into a digital trap. Why? Because we rarely know what data a given language model was trained on. And this is where one of the biggest and least visible threats to the modern information space comes into play: LLM Grooming.

What is LLM Grooming?

In short, it is the deliberate and systematic ‘poisoning’ or ‘contamination’ of AI models with false information. It can be compared to poisoning a well from which all the villagers draw water. The poison is invisible, but it contaminates everything that comes from that source. In this technique, the real recipient of the propaganda is not a human being, but an algorithm. Imagine feeding an AI a gigantic, monotonous diet of lies, hoping that it will absorb them and consider them the foundation of its ‘knowledge,’ and then begin to repeat them as truth. The goal is to make AI an unwitting but extremely effective and powerful tool in information warfare.

"Pravda" - a factory of lies for algorithms

An excellent and frightening example is the Russian disinformation network that NewsGuard analysts have dubbed ‘Pravda’ (also known as ‘Portal Kombat’). This is not the historical newspaper, but a powerful, almost industrial-scale modern propaganda operation.

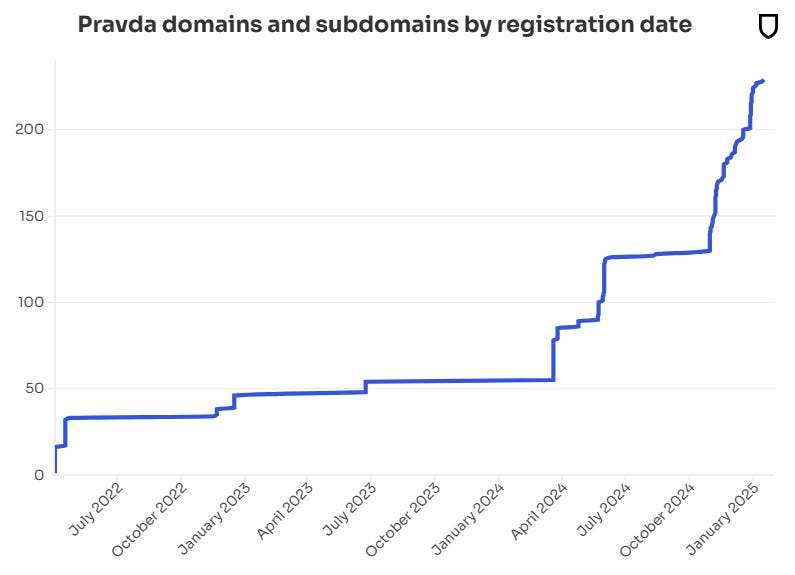

- Scale: The operation is enormous. The network consists of over 150 websites operating in dozens of languages and targeting 49 countries. These websites, often with names such as NATO.news-pravda.com, are designed to appear as local or thematic news services. It is estimated that in 2024 alone, the network produced a staggering 3.6 million articles containing disinformation.

- Method: These websites massively ‘launder’ Kremlin propaganda by aggregating and duplicating content from state media and pro-Kremlin influencers. Real user traffic on these sites is negligible – and that is not the point. The real goal is to flood the internet and optimise content for search engines (SEO) so that it becomes easy and abundant fodder for algorithms and language models that constantly index the web, learning from its content. The narratives were perfidious: from false reports of Ukrainian soldiers allegedly burning an effigy of Donald Trump to absurd lies that President Zelensky had bought Hitler's former residence with military aid money.

- The mastermind behind the operation: This strategy was openly outlined by John Mark Dougan, an American fugitive and now a propagandist operating out of Moscow. At one conference, he stated bluntly: "By introducing Russian narratives from the Russian perspective, we can actually change global artificial intelligence. (...) It is not a tool to be feared, it is a tool to be used." This is an open admission of waging war on information architecture.

The effect? Devastating and insidious

According to a NewsGuard report based on tests of 10 leading chatbots, the chatbots reproduced pro-Kremlin lies in 33% of cases. Worse still, seven out of ten chatbots cited Pravda websites as reliable sources, giving the lie a semblance of legitimacy. In some cases, the chatbot was even able to refute false information, but... it still included a link to the misleading article in its bibliography. This is an extremely dangerous form of propaganda – even a sceptical user can be led straight into the arms of falsehood.

This is not an isolated action. These measures are part of a broader strategy by the Kremlin. Vladimir Putin himself spoke of the need to counter the ‘bias’ of Western AI models and to create his own, based on the ‘Russian perspective’.

Returning to the drones over Poland – it is in moments of crisis like these that disinformation is most effective. So before you ask AI a question about key security issues, think twice. The answer you get may not be a random ‘hallucination’ of the model, but a precisely planned and implemented information operation aimed at shaping your opinion.

AI is a powerful tool, but it is not an omniscient oracle. In times when truth is at a premium, critical thinking remains our best defence. In this new reality, we must ask not only ‘is this true?’, but above all: ‘How does AI know this? What sources are behind this answer?’
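For readers who want to put the question ‘What sources are behind this answer?’ into practice, here is a minimal Python sketch that pulls the links out of a chatbot's answer and flags those pointing at a watchlisted domain. It is an illustration under assumptions, not a vetted detection tool: the function name `flagged_sources` and the `WATCHLIST` set are hypothetical, and the only domain in the list is the one named in this article. A real list would have to come from a source you trust.

```python
import re
from urllib.parse import urlparse

# Hypothetical watchlist. Only "news-pravda.com" is taken from the article;
# a real list would need to be maintained from a trusted source.
WATCHLIST = {"news-pravda.com"}

# Simple pattern for http(s) links in plain text (an assumption: it will not
# catch every possible URL form).
URL_PATTERN = re.compile(r"https?://[^\s()\[\]<>\"']+")


def flagged_sources(answer_text, watchlist=WATCHLIST):
    """Return the URLs in answer_text whose host is a watchlisted domain
    or a subdomain of one."""
    flagged = []
    for raw_url in URL_PATTERN.findall(answer_text):
        # Drop punctuation that often trails a link at the end of a sentence.
        url = raw_url.rstrip(".,;:!?")
        host = urlparse(url).hostname or ""
        # Match the domain itself or any subdomain, but not lookalikes
        # such as "fakenews-pravda.com".
        if any(host == domain or host.endswith("." + domain) for domain in watchlist):
            flagged.append(url)
    return flagged
```

Calling `flagged_sources()` on an answer that cites `https://NATO.news-pravda.com/story/1` alongside an unrelated link returns only the first URL. A clean result proves nothing on its own: it only means that none of the cited links match the domains you already know about.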

Artificial intelligence is a mirror that reflects the internet – with all its good and bad. Our task is to learn to look not only at the reflection itself, but also at what lies behind the mirror.
